What is Cache?

Least Frequently Used (LFU)

In LFU we check the old page as well as the frequency of that page and if the frequency of the page is larger than the old page we cannot remove it and if all the old pages are having same frequency then take last i.e FIFO method for that and remove that page.

Least Recently Used (LRU)

In case of LRU, we evict least recently used entry. As Cache purpose is to provide fast and efficient way of retrieving data. it need to meet certain requirement.

Strengths Of LRU Cache

How to implement LRU cache in Java using HashMap and Doubly Linked List?

In Least Recently Used Cache, we need to evict least recently used entry and also provide fast and efficient way of retrieving data. The LRU cache must be:

-K Himaanshu Shuklaa..

- As its expensive to every-time fetch data from the main storage, we story it in temporary location from where it can be retrieved faster. This temporary location is called Cache.

- A Cache is made of pool of entries and these entries are a copy of real data which are in storage and it is tagged with a tag (key identifier) value for retrieval.

- When an application receives a request to get a particular information, it is first checked in cache. If an entry is found with a tag matching with the request, it will be returned. Else it will be fetched from the main storage, kept in Cache and then returned to the client.

- When a request is received and if an entry is found with a tag matching in the Cache, it is called Cache Hit.

- When the tag isn’t found in the cache it is known as cache miss. In case of cache miss data is retrieved from the main storage and the data is placed in the cache, so in future hits it will be found and will make a cache hit.

- In case of cache miss, data is fetched from the back storage, and placed on the cache. But how much space the data we just fetched takes in the cache memory? This is known as storage cost.

- When we need to load the data we need to know how much does it take to load the data. This is known as retrieval cost.

- Sometimes we need to update the details present in the cache with the latest details from the back storage to keep our cache up-to-date, this is known as Invalidation.

- Entry will be invalidate from cache and fetched again from the back storage to get an updated version.

- Cache Miss need to be handled carefully. Before putting the data in cache, we need to check if space is available in Cache or not.

- If the cache is full, we need to make space for this newly retrieved data, so that we can place it in Cache. This is done by replacement policy (caching algorithms), which decide which entry will be remove to make more room.

Least Frequently Used (LFU)

In Least Frequently Used caching algorithm the least frequently used cache block is removed whenever the cache is overflowed.

In LFU we check the old page as well as the frequency of that page and if the frequency of the page is larger than the old page we cannot remove it and if all the old pages are having same frequency then take last i.e FIFO method for that and remove that page.

In case of LRU, we evict least recently used entry. As Cache purpose is to provide fast and efficient way of retrieving data. it need to meet certain requirement.

Strengths Of LRU Cache

- Fast accesses: LRU caches store items in order from most-recently used to least-recently used. That means both can be accessed in O(1) time.

- Fast updates: Each time an item is accessed, updating the cache takes O(1) time.

- Heavy Space: An LRU cache tracking n items requires a linked list of length n, and a hash map holding n items. That's O(n) space, but it's still two data structures (as opposed to one).

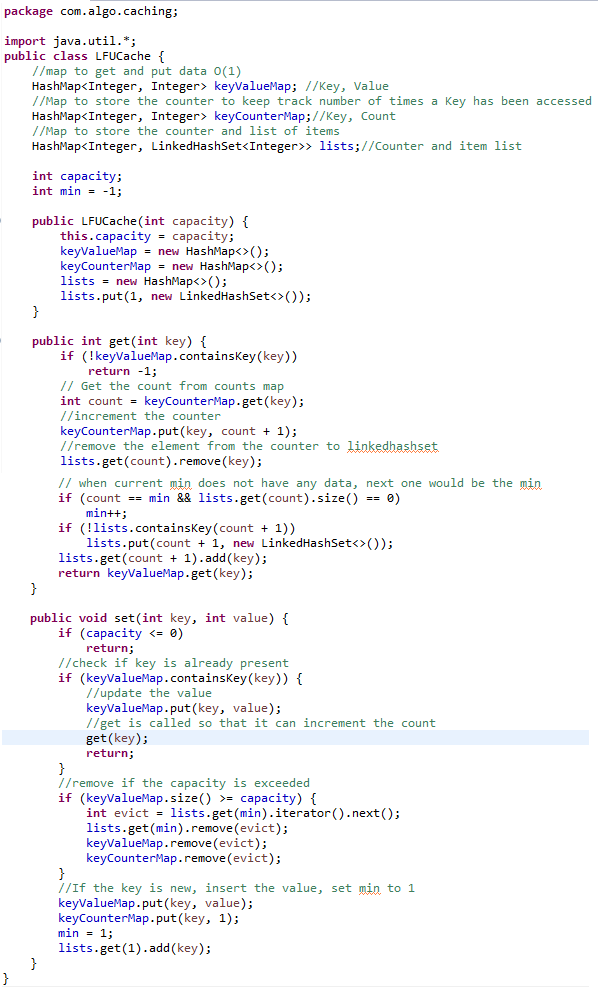

- Let's say we need to remove least frequently used item from the cache to put the new data into the cache, but the requirements are to put(K, V) and get(K) is to be done in O(1). To put and get data in Java in O(1), we need to use Map (HashMap < K, V > ).

- Since we need to find the least frequently used item, we need a counter to keep track number of times a Key(K) has been accessed (get or put). For this we need another Map < K, C > , where K is the key of the item and C is the counter.

- We need a list as well to store the information of count and items key. Let's say item A is used 7 times, where as B is used 9 times. We need to store that information such a way that will hold the items in a list based on their insertion order(FIFO). To achieve that we can use HashSet < K > and more precisely LinkedHashSet < K > . Since we want to keep track of the counter as well we need another map, HashMap < K,LinkedHashSet < K > >

Least Frequently Used (LFU) Implementation In Java

GIT URL: LFU Cache In Java

How to implement LRU cache in Java using HashMap and Doubly Linked List?

In Least Recently Used Cache, we need to evict least recently used entry and also provide fast and efficient way of retrieving data. The LRU cache must be:

- Fixed Size

- Fast Access: Insert and retrieval operation should be fast, preferably O(1) time.

- Replacement of Entry when the memory is reached: A cache should have efficient algorithm to evict the entry when memory is full.

- For O(1) lookup, we can use HashMap, but HashMap does not has mechanism of tracking which entry has been queried recently and which is not.

- To do this we need another data-structure which provide fast insertion, deletion and updation.

- For LRU we can use Doubly Linkedlist, because it will give O(1) deletion, updation and insertion if we have the address of Node on which this operation has to perform.

- To implement LRU cache, we need a HashMap and a Doubly LinkedList. The HashMap will hold the keys and address of the nodes of Doubly LinkedList, where as the Doubly LinkedList will hold the values of keys.

- To keep track of recently used entries, whenever any entry is accessed it will removed from the position where it's present and it then it will be added at the start of linkedlist. By moving the entry in the top (whenever its accessed), we will keep all the recently used entries on the top and least used will be in the bottom.

Least Recently Used (LRU) Implementation In Java

GIT URL: LRU Cache In Java

How can we implement Least Recently Used(LRU) Cache using LinkedHashMap?

To implement LRUCache, we need to extend LinkedHashMap. FYI, the LinkedHashMap can order the elements in Insertion order as well as Access order.

ALSO CHECK: How LinkedHashMap works internally in Java?

By default, LinkedHashMap maintains the data in Insertion order. However we can configure LinkedHashMap to maintain the data in Access order by setting the accessOrder flag to true.

In our LRUCache we will set accessOrder flag to true in its three argument copy constructor. Additionally, we will override method removeEldestEntry that LinkedHashMap calls after every put method call to check whether it should remove the eldest element. In our implementation, we will return true when size becomes greater than the capacity to let LinkedHashMap remove the least recently accessed element.

How can we implement Least Recently Used(LRU) Cache using LinkedHashMap?

To implement LRUCache, we need to extend LinkedHashMap. FYI, the LinkedHashMap can order the elements in Insertion order as well as Access order.

ALSO CHECK: How LinkedHashMap works internally in Java?

By default, LinkedHashMap maintains the data in Insertion order. However we can configure LinkedHashMap to maintain the data in Access order by setting the accessOrder flag to true.

In our LRUCache we will set accessOrder flag to true in its three argument copy constructor. Additionally, we will override method removeEldestEntry that LinkedHashMap calls after every put method call to check whether it should remove the eldest element. In our implementation, we will return true when size becomes greater than the capacity to let LinkedHashMap remove the least recently accessed element.

No comments:

Post a Comment