December 31, 2019

December 26, 2019

Shishir Sharma roped in to play RAW chief in Ullu App's Peshawar..

December 16, 2019

December 15, 2019

December 13, 2019

December 12, 2019

Priyaank Sharma relives fond childhood memories with cousins Shraddha Kapoor and Siddhanth Kapoor

Actress Padmini Kolhapure’s son Priyaank Sharma is all set to make his Bollywood debut with Karan Vishwanath Kashyap’s Sab Kushal Mangal. While the budding actor is gearing up for the release of his upcoming rom-com alongside newbie Riva Kishan and Akshaye Khanna, the fact that he is a star-kid never bothered him.

December 08, 2019

What is API Gateway?

What is the API Gateway pattern?

Let's start with the use case. Assume we are developing an application where users can purchase products. Here is the list of web services available:

- /home

- /productdetails

- /productdetails/add

- /productdetails/delete

- /cartdetails/get

- /cartdetails/add
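To make the use case concrete, here is a minimal sketch of what a gateway does with the endpoints listed above: it exposes one entry point and forwards each path to the service that owns it. The backend names and ports (product-service, cart-service, home-service) are hypothetical and only serve the illustration; a real gateway would also handle concerns such as authentication and load balancing.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Minimal sketch of gateway routing: one entry point maps request paths to backend services.
// The service names and ports below are hypothetical, not taken from the original post.
class GatewayRouterSketch {
    private final Map<String, String> routes = new LinkedHashMap<>();

    GatewayRouterSketch() {
        routes.put("/productdetails", "http://product-service:8081");
        routes.put("/cartdetails", "http://cart-service:8082");
        routes.put("/home", "http://home-service:8080");
    }

    // Returns the backend URL for a path, or null when no route matches.
    String resolve(String path) {
        for (Map.Entry<String, String> route : routes.entrySet()) {
            String prefix = route.getKey();
            if (path.equals(prefix) || path.startsWith(prefix + "/")) {
                return route.getValue() + path;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        GatewayRouterSketch gateway = new GatewayRouterSketch();
        System.out.println(gateway.resolve("/productdetails/add"));
        System.out.println(gateway.resolve("/cartdetails/get"));
    }
}
```

The client only ever talks to the gateway, so the individual services can move or be split further without the client noticing.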

HATEOAS and Richardson Maturity Model

The Richardson Maturity Model (RMM), proposed by Leonard Richardson, organizes REST APIs into four levels. Here are the four levels of RMM:

- Level 0: The Swamp of POX

- Level 1: Resources

- Level 2: HTTP Verbs

- Level 3: Hypermedia Controls

December 07, 2019

Part 17: Microservices (CQRS and Event Sourcing)

Event Sourcing

A shared database is not recommended in a microservices-based approach, because, if there is a change in one data model, then other services are also impacted. As part of microservices best practices, each microservice should have its own database.

Let's say you have Customer and Order microservices running in their separate containers. While the Order service will take care of creating, deleting, updating, and retrieving order data, the Customer service will work with customer data.

December 06, 2019

Part 16: Microservices (Implementing Circuit Breaker and Bulkhead patterns using Resilience4j)

Circuit Breaker Pattern

Circuit breaker is a resilience pattern that helps prevent cascading failures. A circuit breaker is used to isolate a faulty service: it wraps a fragile function call (or an integration point with an external service) in a special circuit breaker object, which monitors for failures. Once the failures reach a certain threshold, the circuit breaker trips, and all further calls to the circuit breaker return with an error, without the protected call being made at all.
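The behaviour described above can be sketched in plain Java. This is only an illustration of the idea, not how Hystrix or Resilience4j implement it: the class name and the consecutive-failure threshold are assumptions, and instead of returning an error the sketch returns a fallback value once the circuit is open.

```java
import java.util.function.Supplier;

// Minimal circuit breaker sketch: counts consecutive failures and, once the
// threshold is reached, rejects calls without invoking the protected function.
// Real libraries add timeouts, half-open probing, and metrics.
class CircuitBreakerSketch {
    private final int failureThreshold;
    private int consecutiveFailures = 0;

    CircuitBreakerSketch(int failureThreshold) {
        this.failureThreshold = failureThreshold;
    }

    boolean isOpen() {
        return consecutiveFailures >= failureThreshold;
    }

    // Runs the protected call, or returns the fallback when the circuit is open or the call fails.
    <T> T call(Supplier<T> protectedCall, Supplier<T> fallback) {
        if (isOpen()) {
            return fallback.get();          // tripped: the protected call is not made at all
        }
        try {
            T result = protectedCall.get();
            consecutiveFailures = 0;        // a success resets the failure count
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            return fallback.get();
        }
    }
}
```

Note that this sketch never closes the circuit again once it has tripped; a production breaker would retry the protected call after a wait period.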

ALSO READ: Part 6: Microservices (Fault Tolerance, Resilience, Circuit Breaker Pattern)

December 05, 2019

Part 15: Microservices (Saga Pattern)

Observability

Every application relies on data and the success or failure of any business relies on efficient data management.

Data management in a monolithic system can get pretty complex. However, it can be a completely different story if you are using a microservices architecture.

Here are a couple of data management patterns for microservices:

- Database per Service - each service has its own private database

- Shared database - services share a database

- Saga - use sagas, which are sequences of local transactions, to maintain data consistency across services

- API Composition - implement queries by invoking the services that own the data and performing an in-memory join

- CQRS - implement queries by maintaining one or more materialized views that can be efficiently queried

- Domain event - publish an event whenever data changes

- Event sourcing - persist aggregates as a sequence of events
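To illustrate the last pattern in the list, here is a minimal event sourcing sketch. The event types, fields, and class names are hypothetical; the point is only that the order's current state is never stored directly and is instead rebuilt by replaying its events.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal event sourcing sketch: state is derived by replaying an append-only event log.
// Event names and fields are hypothetical.
class EventSourcingSketch {
    static final class OrderEvent {
        final String type;   // "CREATED", "ITEM_ADDED", or "CANCELLED"
        final int amount;    // price of the added item; 0 for other events
        OrderEvent(String type, int amount) { this.type = type; this.amount = amount; }
    }

    static final class OrderState {
        String status = "NONE";
        int total = 0;
    }

    private final List<OrderEvent> eventStore = new ArrayList<>(); // append-only log

    void append(OrderEvent event) { eventStore.add(event); }

    // Rebuilds the current state from scratch by applying every stored event in order.
    OrderState replay() {
        OrderState state = new OrderState();
        for (OrderEvent e : eventStore) {
            switch (e.type) {
                case "CREATED": state.status = "OPEN"; break;
                case "ITEM_ADDED": state.total += e.amount; break;
                case "CANCELLED": state.status = "CANCELLED"; break;
                default: throw new IllegalArgumentException("Unknown event: " + e.type);
            }
        }
        return state;
    }
}
```

Because the log is the source of truth, the same events can also feed the materialized views that CQRS relies on.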

Part 14: Microservices (Observability Patterns)

Observability

- Log aggregation - aggregate application logs

- Application metrics - instrument a service’s code to gather statistics about operations

- Audit logging - record user activity in a database

- Distributed tracing - instrument services with code that assigns each external request a unique identifier that is passed between services. Record information (e.g. start time, end time) about the work (e.g. service requests) performed when handling the external request in a centralized service

- Exception tracking - report all exceptions to a centralized exception tracking service that aggregates and tracks exceptions and notifies developers.

- Health check API - service API (e.g. HTTP endpoint) that returns the health of the service and can be pinged, for example, by a monitoring service

- Log deployments and changes

December 04, 2019

Part 13: Microservices (Deployment Patterns)

Deployment Patterns

- Multiple service instances per host - deploy multiple service instances on a single host

- Service instance per host - deploy each service instance in its own host

- Service instance per VM - deploy each service instance in its own VM

- Service instance per Container - deploy each service instance in its own container

- Serverless deployment - deploy a service using a serverless deployment platform

- Service deployment platform - deploy services using a highly automated deployment platform that provides a service abstraction

Part 12: Microservices (Decomposition)

How to decompose an application into services?

1). Decompose by business capability pattern: define services corresponding to business capabilities

2). Decompose by subdomain pattern: define services corresponding to DDD subdomains

3). Self-contained Service pattern: design services to handle synchronous requests without waiting for other services to respond

4). Service per team

Part 11: Microservices (Spring Cloud Config Server)

Cross Cutting Concerns

Cross cutting concerns can be handled by:

- Microservice chassis - a framework that handles cross-cutting concerns and simplifies the development of services.

- Externalized configuration - externalize all configuration such as database location and credentials.

We can configure properties in application.properties. Configuration can be achieved in many ways:

1). A single application.properties inside the jar.

2). Multiple application.properties inside the jar for different profiles.

3). application.properties outside the jar, kept in the same location as the jar. When the jar is executed, this application.properties overrides the one inside the jar.

4). application.properties for different profiles at some other location. While starting the jar, we can pass the active profile and the path of the property files.
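The override behaviour in option 3 can be imitated with nothing but java.util.Properties. This is a sketch of the layering idea, not Spring Boot's actual loading mechanism; the class name is hypothetical and the two "files" are passed in as strings to keep the example self-contained.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Sketch of layered configuration: values from the "external" file win,
// and the "bundled" file (the one inside the jar) supplies defaults.
class LayeredConfigSketch {
    static Properties load(String bundled, String external) {
        try {
            Properties defaults = new Properties();
            defaults.load(new StringReader(bundled));

            Properties effective = new Properties(defaults); // falls back to defaults on a miss
            effective.load(new StringReader(external));
            return effective;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Properties config = load(
                "our.greeting=Hello\nserver.port=8080\n",   // bundled inside the jar
                "our.greeting=Namaste\n");                  // placed next to the jar
        System.out.println(config.getProperty("our.greeting"));
        System.out.println(config.getProperty("server.port"));
    }
}
```

Keys present in both sources resolve to the external value, while keys present only in the bundled file are still available.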

Part 10: Microservices (Configuring Spring Boot Application: @Value, @ConfigurationProperties)

Spring Boot lets you externalize your configuration so that you can work with the same application code in different environments. You can use properties files, YAML files, environment variables, and command-line arguments to externalize configuration.

Using property file config with Spring Boot

Let's say you have configured the property below in Spring Boot's application.properties:

our.greeting=Hello

Now you want to use this property in your application. Let's say we have a greetMe() API inside GreetingController that returns the message configured in the property file. To read the message from the property file, we can use 'value injection': we can inject property values directly into our beans by using the @Value annotation.

December 03, 2019

Part 9: Microservices (Bulkhead Pattern using Hystrix)

What is Bulkhead Pattern?

Bulkheads in ships separate components or sections of a ship such that if one portion of a ship is breached, flooding can be contained to that section.

Once contained, the ship can continue operations without risk of sinking.

In this fashion, ship bulkheads perform a similar function to physical building firewalls, where the firewall is meant to contain a fire to a specific section of the building.

The microservice bulkhead pattern is analogous to the bulkhead on a ship. The goal of the bulkhead pattern is to prevent faults in one part of a system from taking the entire system down. By separating both functionality and data, failures in one component of a solution do not propagate to other components. This is most commonly employed to help scale what might otherwise be monolithic datastores.
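One common way to build such compartments in code is to cap the number of concurrent calls per dependency. The sketch below uses a plain java.util.concurrent.Semaphore for that; the class name and the reject-with-fallback policy are assumptions for illustration, and Hystrix itself typically isolates calls with separate thread pools instead.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Minimal bulkhead sketch: each downstream dependency gets its own fixed number
// of permits, so a slow dependency can only exhaust its own compartment.
class BulkheadSketch {
    private final Semaphore permits;

    BulkheadSketch(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    // Runs the call if a permit is free; otherwise returns the fallback immediately.
    <T> T execute(Supplier<T> call, Supplier<T> fallback) {
        if (!permits.tryAcquire()) {
            return fallback.get();   // compartment is full: reject instead of queueing
        }
        try {
            return call.get();
        } finally {
            permits.release();       // always free the permit, even if the call throws
        }
    }
}
```

Creating one BulkheadSketch per downstream service keeps a flood of slow calls to one service from consuming the capacity needed to call the others.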

Part 8: Microservices (Hystrix Dashboard)

What is Hystrix Dashboard and how can we add it in our Spring Boot App?

Hystrix also provides an optional feature to monitor all of your circuit breakers in a visually-friendly fashion.

Steps to add Hystrix Dashboard:

1). We need to add spring-cloud-starter-netflix-hystrix-dashboard and spring-boot-starter-actuator in the pom.xml.

2). To enable it we have to add the @EnableHystrixDashboard annotation to our main class.

3). Also, in our application.properties, let's include the stream: 'management.endpoints.web.exposure.include=hystrix.stream'. Doing so exposes /actuator/hystrix.stream as a management endpoint.

Part 7: Microservices (Hystrix)

What is Hystrix?

- It's an open source library originally created by Netflix.

- It implements the circuit breaker pattern, so we don't have to implement it ourselves. It gives us configuration parameters that control when the circuit opens and closes.

- The Hystrix library helps to control the interaction between services by providing fault tolerance and latency tolerance. It improves the overall resilience of the system by isolating failing services and stopping the cascading effect of failures.

- For example, when you call a third-party API that takes too long to respond, control goes to the fallback method, which returns a custom response to your application.

- The best part is that it works well with Spring Boot.

- The downside is that Hystrix is no longer under active development; it is currently in maintenance mode.

Steps to add Hystrix:

- Add the Maven dependency for 'spring-cloud-starter-netflix-hystrix'.

- Add the @EnableCircuitBreaker annotation to the application class.

- Add @HystrixCommand to the methods that need circuit breakers.

- Configure Hystrix behaviour (adding the parameters).

How does Hystrix work?

For the circuit breaker to work, Hystrix scans @Component or @Service annotated classes for @HystrixCommand annotated methods.

Any method annotated with @HystrixCommand is managed by Hystrix, and is therefore wrapped by a proxy that manages all calls to that method through a separate, initially fixed thread pool.

Note that @HystrixCommand can be given an associated fallback method. This fallback has to use the same signature as the original method.

Hystrix Demo

In part 5 of this blog series, we created three projects, depart-employee-details, department-details and employee-details, which expose the getDetails, getDepartmentDetails and getEmployeeDetails APIs respectively.

getDetails calls getDepartmentDetails and then, for each department, fetches the employee information by calling getEmployeeDetails. After this, it returns the consolidated result.

You can download the code up to blog post 5 from the URL below:

GIT URL: microservices

Now we will add the circuit breaker pattern to it. Where are we going to add it? Since getDetails calls two other web services, we will add the circuit breaker to the getDetails API.

Step 1). Let's add the Hystrix dependency in 'depart-employee-details'.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-hystrix</artifactId>
    <version>2.2.3.RELEASE</version>
</dependency>

Step 2). Add @EnableCircuitBreaker annotation to the application class of 'depart-employee-details'.

Step 3 & 4). Add @HystrixCommand to the methods that need circuit breakers and configure behavior.

The getDetails API of 'depart-employee-details' calls two other APIs, so we will add @HystrixCommand on this API.

To test whether it's working or not, start discovery-server and then depart-employee-details. Open the browser and hit http://localhost:8081/details/getDetails. This will show you the hardcoded output from the fallback method.

FYI, we have not started employee-details and department-details. That's why, when the getDetails API tries to call getDepartmentDetails and getEmployeeDetails, it gets an error (since the services are down) and returns the result from the fallback method 'fallbackgetDetails()'.

Download the code till now from below GIT url:

GIT URL: microservices

Refactoring for granular fallback and Configuring Hystrix parameters

The getDetails API of 'depart-employee-details' is calling getDepartmentDetails API of 'department-details' and then getEmployeeDetails API of 'employee-details' project.

So far we have added the fallback on getDetails; now we will make it more granular.

Instead of adding a fallback for the wrapper API (i.e. getDetails), we will add fallbacks for both getDepartmentDetails and getEmployeeDetails. For that, we need to create two service classes, DepartmentService and EmployeeService, in 'depart-employee-details'. We also need to modify getDetails so that it calls the APIs via the newly created service classes.

(Code listings: DepEmpResource.java, EmployeeService.java, DepartmentService.java)

Why did we create new service classes?

We could have added the fallback methods for both getDepartmentDetails and getEmployeeDetails in DepEmpResource [where the getDetails API is present], yet we created separate service classes to get Department and Employee details. Why?

Hystrix creates a proxy for the class where we add @HystrixCommand. If we created the fallback methods in DepEmpResource, the calls made from getDetails to other methods of the same class would not pass through that proxy, so the separate fallbacks would not be invoked.

-K Himaanshu Shuklaa..

December 02, 2019

Part 6: Microservices (Fault Tolerance, Resilience, Circuit Breaker Pattern)

What Is Fault Tolerance and Resilience?

As per Wikipedia, fault tolerance is the property that enables a system to continue operating properly in the event of the failure of some of its components.

In other words, fault tolerance describes how much impact a fault has on the application.

Resilience is the capacity to recover quickly after a failure. Resilience is how many faults a system can tolerate before it is brought down to its knees.

Part 5: Microservices Demo (Service Discovery using Eureka)

Why use Service Discovery?

Service discovery is how applications and (micro)services locate each other on a network. Service discovery implementations include both: a central server (or servers) that maintain a global view of addresses and clients that connect to the central server to update and retrieve addresses.

Let’s imagine that we are writing some code that invokes a service that has a REST API. In order to make a request, our code needs to know the network location (IP address and port etc) of a service instance.

In a traditional application running on physical hardware, the network locations of service instances are relatively static. For example, our code can read the network locations from a configuration file that is occasionally updated. In a modern, cloud-based microservices application, however, this is a much more difficult problem to solve.

Service instances have dynamically assigned network locations. Moreover, the set of service instances changes dynamically because of autoscaling, failures, and upgrades. Consequently, our client code needs to use a more elaborate service discovery mechanism.
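The idea of a central registry can be sketched with an in-memory map. The class and method names below are hypothetical, and the addresses in the usage are made up; a real registry such as Eureka additionally tracks heartbeats and expires instances that stop reporting.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// In-memory sketch of a service registry: instances register their address,
// and clients look addresses up by service name instead of hard-coding them.
class ServiceRegistrySketch {
    private final Map<String, List<String>> instances = new ConcurrentHashMap<>();

    // Called by a service instance when it starts.
    void register(String serviceName, String address) {
        instances.computeIfAbsent(serviceName, k -> new CopyOnWriteArrayList<>()).add(address);
    }

    // Called when an instance shuts down (or is detected as failed).
    void deregister(String serviceName, String address) {
        List<String> addresses = instances.get(serviceName);
        if (addresses != null) {
            addresses.remove(address);
        }
    }

    // Called by clients to find the currently known instances of a service.
    List<String> lookup(String serviceName) {
        return new ArrayList<>(instances.getOrDefault(serviceName, Collections.emptyList()));
    }
}
```

A client would call lookup("employee-details") before each request (or cache the result briefly) and pick one of the returned addresses.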

Part 4: Microservices Demo

We will develop a microservice using Spring Cloud which will return details about all the departments that exist, along with all the employees working in a particular department.

December 01, 2019

Part 3: Microservices Interview Questions And Answers

A Mock is generally a dummy object where certain features are set into it initially. Its behavior mainly depends on these features, which are then tested.

A Stub is an object that helps in running the test. It functions in a fixed manner under certain conditions. This hard-coded behavior helps the stub to run the test.

Part 2: Microservices Interview Questions And Answers

Name three commonly used tools for Microservices

Wiremock, Docker, and Hystrix are important Microservices tools.

Why do we need Containers for Microservices?

To manage a microservice-based application, containers are the easiest alternative. They play a crucial role in the deployment and management of microservices architectures.

a). Isolation: Containers encapsulate the application and its dependencies, providing a lightweight, isolated environment. Each microservice can run in its own container, ensuring that it has everything it needs to operate without interfering with other services. This isolation helps in avoiding conflicts between dependencies and provides consistency across different environments.

b). Scalability: Containers are designed to be easily scalable. Microservices often require dynamic scaling to handle varying workloads. Containers can be quickly started or stopped, making it easier to scale individual microservices independently based on demand. This elasticity allows for efficient resource utilization and cost management.

c). Portability: Containers are highly portable and can run consistently across various environments, including development, testing, and production. This ensures that a microservice behaves the same way regardless of the underlying infrastructure. This portability simplifies the deployment process and supports a "write once, run anywhere" philosophy.

d). Orchestration: Microservices often involve the coordination and orchestration of multiple services. Container orchestration tools, such as Kubernetes and Docker Swarm, help manage the deployment, scaling, and lifecycle of containers. They automate tasks like load balancing, service discovery, and rolling updates, simplifying the management of complex microservices architectures.

e). Dependency Management: Containers package an application along with its dependencies, libraries, and runtime, ensuring that the microservice runs consistently across different environments. This helps eliminate the common problem of "it works on my machine" by creating a consistent environment from development to production.

f). Fast Deployment: Containers can be started or stopped quickly, allowing for fast deployment and updates. This agility is crucial for microservices, where frequent updates and releases are common. It supports practices like continuous integration and continuous deployment (CI/CD), facilitating a more agile and responsive development process.

What is the use of Docker?

Docker offers a container environment that can be used to host any application. This software application and the dependencies that support it are tightly packaged together.

Kafka Part 10: Implement Exactly Once Processing in Kafka

Let's say we are designing a system using Apache Kafka that sends messages from one system to another. While designing it, we need to consider the questions below:

- How do we guarantee all messages are processed?

- How do we avoid/handle duplicate messages?
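One common way to handle duplicates on the consuming side is to make processing idempotent. The sketch below assumes every message carries a unique ID, which is an assumption of this example rather than something Kafka provides by itself, and it keeps the processed IDs in memory only for brevity.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Sketch of an idempotent consumer: a redelivered message is recognised by its ID
// and skipped, so receiving it twice has no extra effect.
// A real system would keep processed IDs in a durable store that is updated
// together with the processing result.
class IdempotentConsumerSketch {
    private final Set<String> processedIds = new HashSet<>();
    private final List<String> results = new ArrayList<>();

    // Returns true if the message was processed, false if it was a duplicate.
    boolean handle(String messageId, String payload) {
        if (!processedIds.add(messageId)) {
            return false;                    // already seen: skip
        }
        results.add(payload.toUpperCase());  // stand-in for the real processing
        return true;
    }

    List<String> results() { return results; }
}
```

This does not replace Kafka's own delivery guarantees; it only ensures that a redelivered message does not produce a second result.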

Part 1: Microservices Interview Questions And Answers (Monolithic vs Microservices)

What is monolithic architecture?

- Monolith means something which is composed all in one piece.

- The Monolithic application describes a single-tiered software application in which different components are combined into a single program from a single platform. The monolithic software is designed to be self-contained. The components of the program are interconnected and interdependent rather than loosely coupled as is the case with modular software programs.

- In a tightly-coupled architecture, each component and its associated components must be present in order for code to be executed or compiled.

- Also, if we need to update any program component, we need to rewrite the whole application, whereas in a modular application any separate module, e.g. a microservice, can be changed without affecting other parts of the program. Unlike in a monolithic architecture, the modules of a modular architecture are relatively independent, which reduces the risk that a change made within one element will create unanticipated changes within other elements. Modular programs also lend themselves to iterative processes more readily than monolithic programs.

Kafka Part 9: Compression

Compression In Kafka

Producers often send data to Kafka as text, commonly in JSON format. JSON has a drawback: the data is stored as strings, and records frequently repeat the same content, so the Kafka topic ends up occupying a lot of disk space. That's why we need compression.
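To see why repetitive JSON compresses so well, here is a small standalone experiment using the JDK's GZIP stream. It does not use Kafka at all (in a Kafka producer, compression is switched on through the compression.type setting); the record fields and class name are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

// Compares the size of a batch of similar JSON records before and after GZIP.
class JsonCompressionSketch {
    static byte[] gzip(byte[] input) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(input);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray(); // safe: the GZIP stream is closed and fully flushed here
    }

    // Builds a batch of similar JSON records (field names are hypothetical).
    static byte[] sampleBatch(int records) {
        StringBuilder json = new StringBuilder();
        for (int i = 0; i < records; i++) {
            json.append("{\"orderId\":").append(i)
                .append(",\"status\":\"CREATED\",\"currency\":\"INR\"}\n");
        }
        return json.toString().getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] raw = sampleBatch(1000);
        byte[] compressed = gzip(raw);
        System.out.println("raw bytes: " + raw.length + ", gzip bytes: " + compressed.length);
    }
}
```

The repeated field names and values are exactly what a compressor exploits, which is also why compressing a whole batch works better than compressing single messages.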

Neo4j Part 8: Interview Questions & Answers

How are files stored in Neo4j?

Neo4j stores graph data in a number of different store files, and each store file holds the data for a specific part of the graph (relationships, nodes, properties, etc.), e.g. neostore.nodestore.db, neostore.propertystore.db, and so on.

Kafka Part 8: Batch Size and linger.ms

What is a Producer Batch and Kafka’s batch size?

- The application hands messages to the producer one by one. The producer puts them into a batch until the batch is full, and then sends the batch to Kafka. Such a batch is known as a producer batch.

- In other words, Kafka producers buffer unsent records for each partition. The size of these buffers is specified by batch.size in the producer configuration. Once the buffer is full, the messages are sent.

- The default batch size is 16 KB, and it can be raised as needed. The larger the batch size, the better the compression, throughput, and efficiency of producer requests. Larger messages tend to be disproportionately delayed by small batch sizes.

- A message should not exceed the batch size; otherwise, the message will not be batched. Also, a batch is allocated per partition, so do not set the batch size to a very high number.
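The size-based part of this behaviour can be modelled in a few lines. The sketch below is a simplified model for a single partition, not Kafka's actual accumulator: the class name is hypothetical, and the time-based flushing controlled by linger.ms is left out.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Simplified model of producer batching for one partition: records accumulate
// until adding the next one would exceed batchSizeBytes, then the batch is sent.
class ProducerBatchSketch {
    private final int batchSizeBytes;
    private final List<List<String>> sentBatches = new ArrayList<>();
    private List<String> current = new ArrayList<>();
    private int currentBytes = 0;

    ProducerBatchSketch(int batchSizeBytes) { this.batchSizeBytes = batchSizeBytes; }

    void send(String record) {
        int size = record.getBytes(StandardCharsets.UTF_8).length;
        if (!current.isEmpty() && currentBytes + size > batchSizeBytes) {
            flush();                 // the record does not fit: ship the batch and start a new one
        }
        current.add(record);
        currentBytes += size;
        if (currentBytes >= batchSizeBytes) {
            flush();                 // full; also covers a single record larger than the batch size
        }
    }

    // Sends whatever is buffered, if anything.
    void flush() {
        if (!current.isEmpty()) {
            sentBatches.add(current);
            current = new ArrayList<>();
            currentBytes = 0;
        }
    }

    List<List<String>> sentBatches() { return sentBatches; }
}
```

In this model, a record larger than the batch size ends up alone in its own batch, which mirrors the point above that oversized messages are not batched with others.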

Kafka Part 7: Why is ZooKeeper always configured with an odd number of nodes?

Let's understand a few basics:

ZooKeeper is a highly available, highly reliable, and fault-tolerant coordination and consensus service for distributed applications like Apache Storm or Kafka. High availability and reliability are achieved through replication.
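The arithmetic behind the odd-number recommendation is short enough to write down. A ZooKeeper ensemble keeps working only while a strict majority of its servers can talk to each other; the class and method names below are just for illustration.

```java
// Quorum arithmetic for a majority-based ensemble.
class ZooKeeperQuorumSketch {
    // Smallest number of nodes that forms a strict majority.
    static int quorumSize(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    // Nodes that can fail while a majority is still reachable.
    static int toleratedFailures(int ensembleSize) {
        return ensembleSize - quorumSize(ensembleSize);
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 6; n++) {
            System.out.println(n + " nodes: quorum=" + quorumSize(n)
                    + ", tolerated failures=" + toleratedFailures(n));
        }
    }
}
```

An ensemble of 3 tolerates 1 failure, and adding a 4th node still tolerates only 1, so the extra even node adds cost without adding fault tolerance.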

Kafka Part 6: Assign and Seek

Assign

When we work with consumer groups, the partitions are assigned automatically to consumers and are rebalanced automatically when consumers are added or removed from the group.

ALSO READ: Kafka Consumer Group, Partition Rebalance, Heartbeat

Sometimes we need a single consumer that always reads data from all the partitions in a topic, or from a specific partition in a topic. In this case, there is no reason for groups or rebalancing. We just assign the consumer specific topics and/or partitions, consume messages, and commit offsets on occasion.

Kafka Part 5: Consumer Group, Partition Rebalance, Heartbeat

What is a Consumer Group?

Consumer Groups is a concept exclusive to Kafka. Every Kafka consumer group consists of one or more consumers that jointly consume a set of subscribed topics.

Let's say we have an application that reads messages from a Kafka topic, performs some validations and some calculations, and writes the results to another data store.

In this case, our application will create a consumer object, subscribe to the appropriate topic, and start receiving messages, validating them and writing the results.

This may work well for a while, but what if the rate at which producers write messages to the topic exceeds the rate at which your application can validate them?

Kafka Part 4: Consumers

We learned how to create a Kafka producer in the previous part of the Kafka series. Now we will create a Kafka consumer.

Reading data from Kafka is a bit different from reading data from other messaging systems. Applications that need to read data from Kafka use a KafkaConsumer to subscribe to Kafka topics and receive messages from these topics.

In this blog post, we will discuss interview questions related to Kafka consumers, and we will also create our own consumer.

Kafka Part 3: Kafka Producer, Callbacks and Keys

What is the role of Kafka producer?

The primary role of a Kafka producer is to take producer properties and a record as inputs and write the record to the appropriate Kafka broker. Producers serialize, partition, compress, and load-balance data across brokers based on partitions.

The workflow of a producer involves five important steps:

- Serialize

- Partition

- Compress

- Accumulate records

- Group by broker and send

Kafka Part 2: Kafka Command Line Interface (CLI)

Now we will create topics from CLI.

- Open another command prompt, execute 'cd D:\Softwares\kafka_2.12-2.3.0\bin\windows'.

- Make sure ZooKeeper and the Kafka broker are running.

- Now execute 'kafka-topics --zookeeper 127.0.0.1:2181 --topic first_topic --create --partitions 3 --replication-factor 1'. This will create a kafka topic with name 'first_topic', with 3 partitions and replication-factor as 1 (in our case we cannot mention replication-factor more than 1 because we have started only one broker).

- After executing the above command you will get a message 'Created topic first_topic'.

- How can we check if our topic is actually created? In the same command prompt, execute 'kafka-topics --zookeeper 127.0.0.1:2181 --list'. This will list all the topics which are present.

- To get more details about the topic that was created, execute 'kafka-topics --zookeeper 127.0.0.1:2181 --topic first_topic --describe'.

Kafka Part 1: Basics

What is Apache Kafka?

Apache Kafka is a publish-subscribe messaging system from Apache, written in Scala. It is a distributed, partitioned and replicated log service. It is a horizontally scalable, fault-tolerant and fast messaging system.

Why Kafka?

Let's say we have a source system and a target system, where the target consumes data from the source system. In the simplest case, we have one source and one target system, so it is easy for the source system to connect with the target. But now let's say there are x sources and y targets, and each source needs to connect with all the targets. In this case it becomes really difficult to maintain the whole system.

Lyrics and English Translation Of Baari

Tenu Takeya Hosh Hi Bhul Gayi,

Garm Garm Chaa Hath Te Dul Gayi,

As I saw you, I lost my senses,

which led me to spill the hot tea on my hand.

November 28, 2019

November 26, 2019

Minissha Lamba will foray into the digital space with Ullu App's Kasak

November 24, 2019

#Algorithms Part 3: Shortest Path Algorithms(Bellman-Ford Algorithm)

Bellman-Ford Algorithm

We first need to make a list of edges. In our case it would be (d,b), (c,d), (a,c), (a,b).

1). Take the list of all edges and mark the distances of all the vertices as infinity, keeping the first one ('a' in our case) as zero. Now we need to relax the edges v-1 times (where v is the number of vertices), in our case three times. Let's start with d to b: d is infinity, so the candidate distance to b is infinity minus 6, which is still infinity, and b stays at infinity. Now we take c to d: c is also infinity, so the shortest path at d stays infinity. Now take a to c: it would be 0+2, which is 2, so we change the shortest path at c from infinity to 2. Now we take a to b, and change the shortest path at b from infinity to 1. We have relaxed the edges for the 1st time.

2). We repeat the process. From d to b the candidate would be infinity (the value at d) minus 6 (since the edge has a negative weight), which is still infinity. The value at b is 1, which is less than infinity, so we do not change it. Now we take c to d: the candidate is 2+3, which is 5. The shortest path at d was infinity, so we change it to 5. Now we take a to c, which gives 2; the value at c is already 2, so we do not change it. The same holds for a to b. We have relaxed the edges for the 2nd time.

3). Again we go from d to b: the candidate is 5-6, that's -1. The shortest path at b is 1, which is greater than -1, so we change it to -1. Then we go from c to d, a to c, and a to b, but the values remain unchanged.

4). We have relaxed the edges for the 3rd time (v-1).

Bellman-Ford fails if there is a negative cycle in the graph. For example, if b-c-d forms a cycle whose total weight is 1 + 3 + (-6), which is -2, every extra pass keeps lowering the distances and no shortest path exists.

- As we have seen in the above example, Dijkstra's algorithm does not always find the shortest path when there are negative edges. Bellman-Ford always gives the correct result even with negative edges (though it has its own drawback).

- Where Dijkstra relaxes each vertex only once, Bellman-Ford relaxes the edges repeatedly, so that we get the correct shortest path to all the vertices.

- In Bellman-Ford we make a list of all the edges and go on relaxing them v-1 times (where v is the number of vertices), after which we will surely have the shortest path to all the vertices, even with negative edges.
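The relaxation passes described in this post can be sketched in Java as follows. This is a minimal sketch, not the post's original code: the class and method names are made up for illustration, the vertices a, b, c, d are mapped to the indices 0 to 3, and the extra pass that reports a negative cycle is one common way to detect the failure case.

```java
import java.util.Arrays;

class BellmanFordSketch {
    // Sentinel for "not reached yet" (long avoids overflow when adding weights).
    static final long INF = Long.MAX_VALUE;

    // edges[i] = {from, to, weight}. Returns the shortest distances from source,
    // or throws if a negative cycle is reachable from the source.
    static long[] shortestPaths(int vertexCount, int[][] edges, int source) {
        long[] dist = new long[vertexCount];
        Arrays.fill(dist, INF);
        dist[source] = 0;
        // Relax every edge v-1 times.
        for (int pass = 1; pass < vertexCount; pass++) {
            for (int[] e : edges) {
                if (dist[e[0]] != INF && dist[e[0]] + e[2] < dist[e[1]]) {
                    dist[e[1]] = dist[e[0]] + e[2];
                }
            }
        }
        // One more pass: any further improvement means a negative cycle.
        for (int[] e : edges) {
            if (dist[e[0]] != INF && dist[e[0]] + e[2] < dist[e[1]]) {
                throw new IllegalStateException("negative cycle detected");
            }
        }
        return dist;
    }

    public static void main(String[] args) {
        // Edges in the same order as the walk-through: (d,b), (c,d), (a,c), (a,b).
        int[][] edges = { {3, 1, -6}, {2, 3, 3}, {0, 2, 2}, {0, 1, 1} };
        System.out.println(Arrays.toString(shortestPaths(4, edges, 0)));
    }
}
```

With the example edges, the distances for a, b, c, d come out as 0, -1, 2 and 5, matching the values reached after the third pass.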

-K Himaanshu Shuklaa..

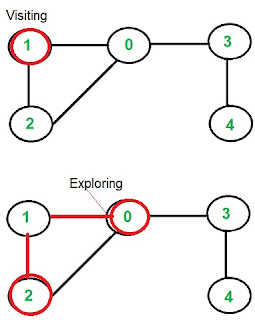

#Algorithms Part 1: Graphs, BFS,DFS, Dijkstra Algorithm, Bellman-Ford Algorithm

Graph Traversal

- It is the process of visiting and exploring a graph for processing.

- We visit and explore each vertex and edge in a graph such that all the vertices are explored exactly once.

- Visiting a node means selecting a node, whereas exploring means exploring the children of the node we have visited.

- Breadth-first search (BFS) is an algorithm for traversing trees and graphs.

- In BFS we start with the root node and explore all sibling nodes before moving on to the next level of siblings.

- A queue is the main data structure used while performing BFS.

- BFS is used by crawlers in search engines. It is the main algorithm used for indexing web pages: it starts from the source page and follows all the links associated with that page. In this case each link is considered a node in the graph.

- BFS is also used in GPS navigation to find neighboring locations.

- BFS is also used to find the shortest path in an unweighted graph.
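A queue-based BFS along the lines of the points above might look like this. It is a minimal sketch: the adjacency-map representation and the names `BfsSketch` and `bfs` are assumptions made for the example.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class BfsSketch {
    // Returns the nodes in the order BFS visits them, starting from 'start'.
    static List<Integer> bfs(Map<Integer, List<Integer>> graph, int start) {
        List<Integer> order = new ArrayList<>();
        Set<Integer> visited = new HashSet<>();
        Deque<Integer> queue = new ArrayDeque<>();
        visited.add(start);
        queue.add(start);
        while (!queue.isEmpty()) {
            int node = queue.poll();          // visit the node at the head of the queue
            order.add(node);
            // Explore its neighbours; nodes without an entry have no neighbours.
            for (int next : graph.getOrDefault(node, Collections.emptyList())) {
                if (visited.add(next)) {      // add() returns false if already seen
                    queue.add(next);
                }
            }
        }
        return order;
    }
}
```

Marking a node as visited when it is enqueued, rather than when it is dequeued, keeps every node in the queue at most once.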

Binary Search Trees, Balance Search Trees, 2-3 ST, Red- Black BST

Tree Data Structure

- If we compare sorted arrays with linked list: Search is fast in SA (O(logn)) and slow in LL (O(n)), but insert & delete is slow in SA (O(n)) and fast in LL (O(1)).

- Binary search trees strike a balance between the two: as long as the tree stays balanced, common operations like insert, delete, and search all take about the same time (O(log n)).

- In Binary search trees we have the nodes, which contain the data. Nodes are connected to other nodes through edges. The top node of the tree is called the root node (in a tree data structure, there can only be one root node).

- A node which has no children is called a leaf node.

- Any node may be considered to be the root of a subtree, which consists of its children and its children's children and so on. The level of a node tells us how far it is from the root.
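To make the node, root and subtree vocabulary concrete, here is a minimal sketch of an unbalanced binary search tree with insert and search. The names `BstSketch`, `insert` and `contains` are illustrative, and duplicate keys are simply ignored as an assumption of this example.

```java
class BstSketch {
    // A node holds the data and links (edges) to its two children.
    static final class Node {
        final int key;
        Node left, right;
        Node(int key) { this.key = key; }
    }

    Node root; // the single top node of the tree

    void insert(int key) {
        root = insert(root, key);
    }

    private Node insert(Node node, int key) {
        if (node == null) return new Node(key);   // empty spot found: new leaf
        if (key < node.key) node.left = insert(node.left, key);
        else if (key > node.key) node.right = insert(node.right, key);
        return node;                              // equal keys are ignored
    }

    boolean contains(int key) {
        Node cur = root;
        while (cur != null) {
            if (key == cur.key) return true;
            cur = key < cur.key ? cur.left : cur.right; // descend one level
        }
        return false;
    }
}
```

Each comparison descends one level, so the cost of both operations is proportional to the height of the tree.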

#Algorithms Part 4: Caching Algorithms in Java (LRU and LFU)

What is Cache?

- As it is expensive to fetch data from the main storage every time, we store it in a temporary location from where it can be retrieved faster. This temporary location is called a cache.

- A cache is made of a pool of entries. Each entry is a copy of real data held in storage and is tagged with a tag (key identifier) value for retrieval.

- When an application receives a request for a particular piece of information, it is first looked up in the cache. If an entry is found with a tag matching the request, it is returned. Otherwise it is fetched from the main storage, kept in the cache, and then returned to the client.
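As one possible illustration of the LRU policy named in the title, the sketch below builds a small cache on top of `java.util.LinkedHashMap` in access order. The class name `LruCacheSketch` and the capacity parameter are assumptions for the example; it is not necessarily how the full post implements it.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class LruCacheSketch<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    LruCacheSketch(int capacity) {
        // accessOrder = true: iteration order goes from least to most recently used.
        super(16, 0.75f, true);
        this.capacity = capacity;
    }

    // Called by LinkedHashMap after each put; returning true evicts the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}
```

For example, with a capacity of 2, putting "a" and "b", reading "a", and then putting "c" evicts "b", because "b" is the least recently used entry at that point.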

Java Sorting Algorithms

Selection Sort

- The selection sort algorithm sorts an array by repeatedly finding the minimum element (considering ascending order) from unsorted part and putting it at the beginning.

- The algorithm maintains two subarrays in a given array: (a).The subarray which is already sorted. (b). Remaining subarray which is unsorted.

- In every iteration of selection sort, the minimum element (considering ascending order) from the unsorted subarray is picked and moved to the sorted subarray.

- It is very simple to implement, but it performs poorly on large inputs because it always makes O(n²) comparisons.
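The steps above can be sketched as a short in-place routine. The class and method names are illustrative only.

```java
class SelectionSortSketch {
    // Sorts the array in ascending order, in place.
    static void sort(int[] a) {
        for (int i = 0; i < a.length - 1; i++) {
            // a[0..i-1] is the sorted subarray; find the minimum of the unsorted rest.
            int min = i;
            for (int j = i + 1; j < a.length; j++) {
                if (a[j] < a[min]) {
                    min = j;
                }
            }
            // Move the minimum to the end of the sorted subarray.
            int tmp = a[i];
            a[i] = a[min];
            a[min] = tmp;
        }
    }
}
```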

#LeetCode: Java Solution of Bitwise AND of Numbers Range problem

Bitwise AND of Numbers Range

Given a range [m, n] where 0 <= m <= n <= 2147483647, return the bitwise AND of all numbers in this range, inclusive.

Example 1: Input: [5,7], Output: 4

Example 2: Input: [0,1], Output: 0
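One common way to solve this, sketched here under the assumption that it is acceptable to differ from the post's linked solution, is to observe that the result is the common binary prefix of m and n: any bit position below that prefix takes the value 0 somewhere in the range. The class and method names are illustrative.

```java
class RangeBitwiseAndSketch {
    static int rangeBitwiseAnd(int m, int n) {
        int shift = 0;
        // Drop low bits until only the common prefix of m and n is left.
        while (m < n) {
            m >>= 1;
            n >>= 1;
            shift++;
        }
        // Put the common prefix back in place; the dropped bits are all zero.
        return m << shift;
    }
}
```

For [5,7] (binary 101 to 111) the common prefix is the leading 1, which gives 100, that is 4.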

Part 2.6: Leet Code Solutions For Java Coding Interview Round

Valid Parenthesis String

Given a string containing only three types of characters: '(', ')' and '*', write a function to check whether this string is valid. We define the validity of a string by these rules:

- Any left parenthesis '(' must have a corresponding right parenthesis ')'.

- Any right parenthesis ')' must have a corresponding left parenthesis '('.

- Left parenthesis '(' must go before the corresponding right parenthesis ')'.

- '*' could be treated as a single right parenthesis ')' or a single left parenthesis '(' or an empty string.

- An empty string is also valid.
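A greedy check for these rules can be sketched by tracking the smallest and largest number of open parentheses that could still be unmatched. This is one known approach and not necessarily the one used in the full post; the names are illustrative.

```java
class ValidParenthesisStringSketch {
    static boolean checkValidString(String s) {
        int low = 0;   // fewest possible unmatched '(' so far
        int high = 0;  // most possible unmatched '(' so far
        for (char c : s.toCharArray()) {
            if (c == '(') {
                low++;
                high++;
            } else if (c == ')') {
                low--;
                high--;
            } else {       // '*' may act as ')', as '(' or as nothing
                low--;
                high++;
            }
            if (high < 0) return false; // too many ')' even in the best case
            if (low < 0) low = 0;       // the open count can never be negative
        }
        return low == 0;
    }
}
```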

Part 2.5: Leet Code Solutions For Java Coding Interview Round

Perform String Shifts

You are given a string s containing lowercase English letters, and a matrix shift, where shift[i] = [direction, amount]:

direction can be 0 (for left shift) or 1 (for right shift).

amount is the amount by which string s is to be shifted.

A left shift by 1 means remove the first character of s and append it to the end.

Similarly, a right shift by 1 means remove the last character of s and add it to the beginning.

Return the final string after all operations.

Example 1:

Input: s = "abc", shift = [[0,1],[1,2]]

Output: "cab"

Explanation:

[0,1] means shift to left by 1. "abc" -> "bca"

[1,2] means shift to right by 2. "bca" -> "cab"

Example 2:

Input: s = "abcdefg", shift = [[1,1],[1,1],[0,2],[1,3]]

Output: "efgabcd"

Explanation:

[1,1] means shift to right by 1. "abcdefg" -> "gabcdef"

[1,1] means shift to right by 1. "gabcdef" -> "fgabcde"

[0,2] means shift to left by 2. "fgabcde" -> "abcdefg"

[1,3] means shift to right by 3. "abcdefg" -> "efgabcd"

Constraints:

1 <= s.length <= 100

s only contains lower case English letters.

1 <= shift.length <= 100

shift[i].length == 2

0 <= shift[i][0] <= 1

0 <= shift[i][1] <= 100
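Since left and right shifts cancel each other, one way to approach this is to add up a single net shift and rotate the string once. The sketch below assumes that approach; the class name is made up for illustration and the code may differ from the linked solution.

```java
class StringShiftSketch {
    static String stringShift(String s, int[][] shift) {
        int net = 0; // net shift to the left; right shifts count as negative
        for (int[] op : shift) {
            net += (op[0] == 0) ? op[1] : -op[1];
        }
        int n = s.length();
        int k = ((net % n) + n) % n; // normalise into the range 0..n-1
        // A left shift by k moves the first k characters to the end.
        return s.substring(k) + s.substring(0, k);
    }
}
```

In Example 1 the net shift is 1 - 2 = -1, which is the same as a left shift by 2, so "abc" becomes "cab".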

GIT URL: Java Solution of Leet Code's Perform String Shifts problem

Java Solution

Last Stone Weight

We have a collection of stones, each stone has a positive integer weight.

Each turn, we choose the two heaviest stones and smash them together.

Suppose the stones have weights x and y with x <= y. The result of this smash is:

If x == y, both stones are totally destroyed;

If x != y, the stone of weight x is totally destroyed, and the stone of weight y has new weight y-x.

At the end, there is at most 1 stone left. Return the weight of this stone (or 0 if there are no stones left.)

Example 1:

Input: [2,7,4,1,8,1]

Output: 1

Explanation:

We combine 7 and 8 to get 1 so the array converts to [2,4,1,1,1] then,

we combine 2 and 4 to get 2 so the array converts to [2,1,1,1] then,

we combine 2 and 1 to get 1 so the array converts to [1,1,1] then,

we combine 1 and 1 to get 0 so the array converts to [1] then that's the value of last stone.

Note:

1 <= stones.length <= 30

1 <= stones[i] <= 1000
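Repeatedly picking the two heaviest stones maps naturally onto a max-heap. The following is a minimal sketch using `java.util.PriorityQueue` with a reversed comparator; the class name is illustrative and the linked solution may be written differently.

```java
import java.util.Collections;
import java.util.PriorityQueue;

class LastStoneWeightSketch {
    static int lastStoneWeight(int[] stones) {
        // Max-heap: the heaviest stone is always at the head.
        PriorityQueue<Integer> heap = new PriorityQueue<>(Collections.reverseOrder());
        for (int stone : stones) {
            heap.add(stone);
        }
        while (heap.size() > 1) {
            int y = heap.poll(); // heaviest
            int x = heap.poll(); // second heaviest, so x <= y
            if (x != y) {
                heap.add(y - x); // the heavier stone survives with weight y-x
            }
        }
        return heap.isEmpty() ? 0 : heap.peek();
    }
}
```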

GIT URL: Java Solution of Leet Code's Last Stone Weight problem

-K Himaanshu Shuklaa..

Java Solution

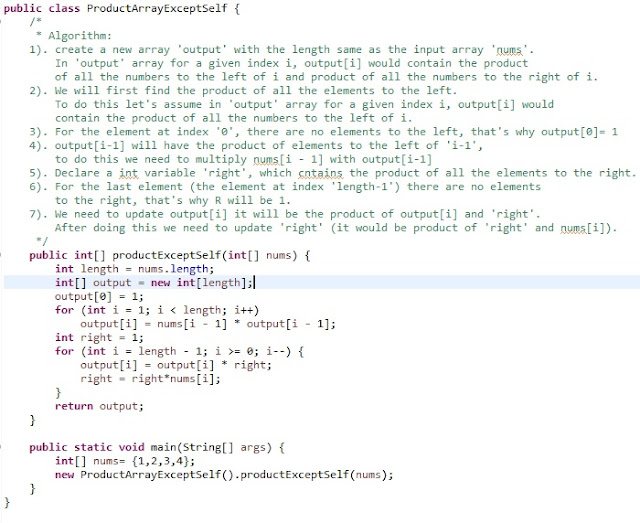

Product of Array Except Self

Given an array nums of n integers where n > 1, return an array output such that output[i] is equal to the product of all the elements of nums except nums[i].

Example:

Input: [1,2,3,4]

Output: [24,12,8,6]

Constraint: It's guaranteed that the product of the elements of any prefix or suffix of the array (including the whole array) fits in a 32 bit integer.

Note: Please solve it without division and in O(n).

Algorithm:

1). Create a new array 'output' with the same length as the input array 'nums'. At the end, for a given index i, output[i] will contain the product of all the numbers to the left of i multiplied by the product of all the numbers to the right of i.

2). We first find the product of all the elements to the left. After this pass, output[i] contains the product of all the numbers to the left of i.

3). For the element at index '0' there are no elements to the left, which is why output[0] = 1.

4). output[i-1] already holds the product of the elements to the left of 'i-1', so output[i] is obtained by multiplying nums[i-1] with output[i-1].

5). Declare an int variable 'right', which contains the product of all the elements to the right.

6). For the last element (the element at index 'length-1') there are no elements to the right, which is why 'right' starts at 1.

7). Walking from the last index to the first, we update output[i] to the product of output[i] and 'right'. After doing this we update 'right' (it becomes the product of 'right' and nums[i]).
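The steps above can be sketched as follows. The variable names 'output' and 'right' follow the algorithm description; the class name is made up for illustration.

```java
class ProductExceptSelfSketch {
    static int[] productExceptSelf(int[] nums) {
        int n = nums.length;
        int[] output = new int[n];
        // Left pass: output[i] = product of everything to the left of i.
        output[0] = 1;
        for (int i = 1; i < n; i++) {
            output[i] = output[i - 1] * nums[i - 1];
        }
        // Right pass: 'right' = product of everything to the right of i.
        int right = 1;
        for (int i = n - 1; i >= 0; i--) {
            output[i] = output[i] * right;
            right = right * nums[i];
        }
        return output;
    }
}
```

For [1,2,3,4] the left pass produces [1,1,2,6], and the right pass turns it into [24,12,8,6].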

GIT URL: Java Solution of Leet Code's Product of Array Except Self problem

Part 2.4: Leet Code Solutions For Java Coding Interview Round

Length of Last Word

Given a string s consisting of upper/lower-case letters and space characters ' ', return the length of the last word in the string (the last word means the last word that appears when reading from left to right).
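One simple approach, sketched here as an assumption since the excerpt does not show the solution, is to scan from the end: skip trailing spaces, then count characters until the next space. The names are illustrative.

```java
class LengthOfLastWordSketch {
    static int lengthOfLastWord(String s) {
        int i = s.length() - 1;
        // Skip any trailing spaces.
        while (i >= 0 && s.charAt(i) == ' ') {
            i--;
        }
        // Count the characters of the last word.
        int length = 0;
        while (i >= 0 && s.charAt(i) != ' ') {
            length++;
            i--;
        }
        return length; // 0 when the string contains no word
    }
}
```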

Part 2.3: Leet Code Solutions For Java Coding Interview Round

Counting Elements

Given an integer array arr, count the elements x such that x + 1 is also in arr. If there are duplicates in arr, count them separately.
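A set-based way to do this is sketched below: collect the values once, then count every element whose successor is present. This is an assumed approach with illustrative names, not necessarily the post's solution.

```java
import java.util.HashSet;
import java.util.Set;

class CountingElementsSketch {
    static int countElements(int[] arr) {
        Set<Integer> values = new HashSet<>();
        for (int x : arr) {
            values.add(x);
        }
        int count = 0;
        // Iterate over the array (not the set) so duplicates are counted separately.
        for (int x : arr) {
            if (values.contains(x + 1)) {
                count++;
            }
        }
        return count;
    }
}
```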

Part 2.2: Leet Code Solutions For Java Coding Interview Round

Single Number

Given a non-empty array of integers, every element appears twice except for one. Find that single one.

Note: Your algorithm should have a linear runtime complexity. Could you implement it without using extra memory?

Example 1

Input: [2,2,1]. Output: 1

Example 2

Input: [4,1,2,1,2]. Output: 4
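A well-known way to meet both the linear-time and no-extra-memory requirements is XOR: since x ^ x = 0 and x ^ 0 = x, all the pairs cancel and only the single element remains. The sketch below assumes that technique; the names are illustrative.

```java
class SingleNumberSketch {
    static int singleNumber(int[] nums) {
        int result = 0;
        for (int x : nums) {
            result ^= x; // paired values cancel each other out
        }
        return result;
    }
}
```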

Part 2.1: Leet Code Solutions For Java Coding Interview Round

1). Remove K Digits

Given a non-negative integer number represented as a string, remove k digits from the number so that the new number is the smallest possible.
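A greedy approach that is commonly used for this problem keeps the digits in a stack and removes a digit whenever a smaller digit follows it. The sketch below assumes that approach, uses a `StringBuilder` as the stack, and assumes k is at most the length of the number; the names are illustrative.

```java
class RemoveKDigitsSketch {
    static String removeKdigits(String num, int k) {
        StringBuilder stack = new StringBuilder();
        for (char c : num.toCharArray()) {
            // Drop larger digits on the left while removals remain.
            while (k > 0 && stack.length() > 0 && stack.charAt(stack.length() - 1) > c) {
                stack.deleteCharAt(stack.length() - 1);
                k--;
            }
            stack.append(c);
        }
        // Digits are now non-decreasing: use leftover removals on the tail.
        stack.setLength(Math.max(0, stack.length() - k));
        // Strip leading zeros, keeping at least one digit if any are left.
        int start = 0;
        while (start < stack.length() - 1 && stack.charAt(start) == '0') {
            start++;
        }
        String result = stack.substring(start);
        return result.isEmpty() ? "0" : result;
    }
}
```

As a check, removing 3 digits from "1432219" drops 4, 3 and one 2, leaving "1219".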

Part 1: Java Algorithms For Coding Interview Round

Two Sum

Given an array of integers, return the indices of the two numbers that add up to a specific target. Assume that each input has exactly one solution, and that you may not use the same element twice. For example, for nums = [1,2,8,9] and target = 9, it returns [0,2], because nums[0] + nums[2] = 1 + 8 = 9.
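A single pass with a hash map from value to index is one common way to solve this. The sketch below assumes that approach; the class name and the exception for inputs without a solution are illustrative choices.

```java
import java.util.HashMap;
import java.util.Map;

class TwoSumSketch {
    static int[] twoSum(int[] nums, int target) {
        Map<Integer, Integer> indexByValue = new HashMap<>();
        for (int i = 0; i < nums.length; i++) {
            // Has the number that completes the pair been seen already?
            Integer j = indexByValue.get(target - nums[i]);
            if (j != null) {
                return new int[] { j, i };
            }
            indexByValue.put(nums[i], i);
        }
        throw new IllegalArgumentException("no two elements add up to the target");
    }
}
```

Because an element is stored in the map only after it has been checked, the same element is never used twice.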

November 23, 2019

November 22, 2019

Rio Kapadia and Gargi Patel bagged Ullu App's upcoming series 'Kasak'

November 21, 2019

November 16, 2019

#JNUFact: Is the fee hike in JNU justified?

Total Number Of Students, Departments and courses offered in JNU

Let's start with the number of students in JNU and the number of departments, along with the courses offered. Here is how it looks:

Total number of students in JNU=8000.

Between the two categories, we have 72% of the campus covered:

- 4578 students (57%) are from social sciences, language, literature & arts.

- 1210 students (15%) are from International studies.

November 14, 2019

Preetisheel Singh basks in the 'shining' glory of Bala

Fresh from the super success of Housefull 4 and appreciation for her work for Ujda Chaman, ace makeup, hair and prosthetic character designer Preetisheel Singh is basking in the glory of critical acclaim for her latest film Bala... or should we say, for having constructed Ayushmann Khurrana's shining baldpate. Tch tch! ;-)

November 12, 2019

Sharhaan Singh roped in for Ullu App's 'Kasak'

November 11, 2019

#Cassandra Part 4

Command To Create Keyspace

CREATE KEYSPACE EmployeeDetails

WITH replication = {'class':'SimpleStrategy', 'replication_factor' : 3};

To verify whether the keyspace is created or not, we can use the DESCRIBE command. If we use this command over keyspaces, it will display all the keyspaces created.

DESCRIBE keyspaces;

#Cassandra Part 3

Define commit log.

It is a mechanism that is used to recover data in case the database crashes. Every operation that is carried out is saved in the commit log. Using this the data can be recovered.

#Cassandra Part 2 : Data Model, Keys, Ring Representation, Virtual Nodes

Cassandra

- Cassandra is an open source, distributed database from Apache that is highly scalable and designed to manage very large amounts of structured data.

- Cassandra is made to easily deploy over a cluster of machines located at geographically different places.

- There is no master slave or central master server, so no single point of failure, no bottleneck, data is replicated, and a faulty node can be replaced without any downtime.

- Cassandra is linearly scalable, which means that with more nodes, the requests served per second per node will not go down. Also, the total throughput of the system will increase with each node being added.

- Cassandra is column oriented, much like a map (or better, a map of sorted maps) or a table with flexible columns where each column is essentially a key-value pair. So, we can add columns as we go, and each row can have a different set of columns (key-value).

- Cassandra does not provide any relational integrity. It is up to the application developer to perform relation management.

- It is a type of NoSQL database.

- Apache HBase and MongoDB are quite popular NoSQL databases besides Cassandra.

- It is widely used in Netflix, eBay, GitHub, Facebook, Twitter etc.

- Cassandra does automatic partitioning and replication.

What is the main objective of creating Cassandra?

The main objective of Cassandra is to handle large amounts of data. A further objective is to provide fault tolerance together with fast transfer of data.

#Cassandra Part 1: NoSQLDatabase

NoSQLDatabase

- A NoSQL database is sometimes called Not Only SQL. It is a database that provides a mechanism to store and retrieve data other than the tabular relations used in relational databases.

- These types of databases are schema-free, support easy replication, have a simple API, are eventually consistent, and can handle huge amounts of data.

- The primary objectives of a NoSQL database are simplicity of design, horizontal scaling, and finer control over availability.

- SQL was designed to be a query language for relational databases, and relational databases are usually table- based, much like what we see in a spreadsheet. In a relational database, records are stored in rows and then the columns represent fields in each row. SQL allows us to query within and between tables in that relational database.

- On the other hand, NoSQL databases are more flexible, NoSQL databases allow us to define fields as we create a record.

- Nested values are common in NoSQL databases. We can have hashes and arrays and objects, and then nest more objects and arrays and hashes within those.

- Also fields are not standardized between records in NoSQL databases, we can have a different structure for every record in your NoSQL database.

November 08, 2019

Why is Tulsi Vivah performed on Devuthani Ekadashi?

Today is the Ekadashi of the Shukla Paksha of the month of Kartik. It is said that Lord Vishnu awakens today from his four-month sleep, which is why this day is also called Devuthani Ekadashi or Prabodhini Ekadashi.

Mantra to awaken Lord Vishnu

Chant this mantra to wake the Lord from his sleep. If you cannot pronounce it, wake Shri Narayan by saying 'Utho Deva, Jaago Deva, Baitho Deva'.

उत्तिष्ठ गोविन्द त्यज निद्रां जगत्पतये। त्वयि सुप्ते जगन्नाथ जगत् सुप्तं भवेदिदम्॥

उत्थिते चेष्टते सर्वमुत्तिष्ठोत्तिष्ठ माधव। गतामेघा वियच्चैव निर्मलं निर्मलादिशः॥

शारदानि च पुष्पाणि गृहाण मम केशव।

There is also a tradition of performing the marriage of Tulsi with Lord Shaligram on the day of Prabodhini Ekadashi.
